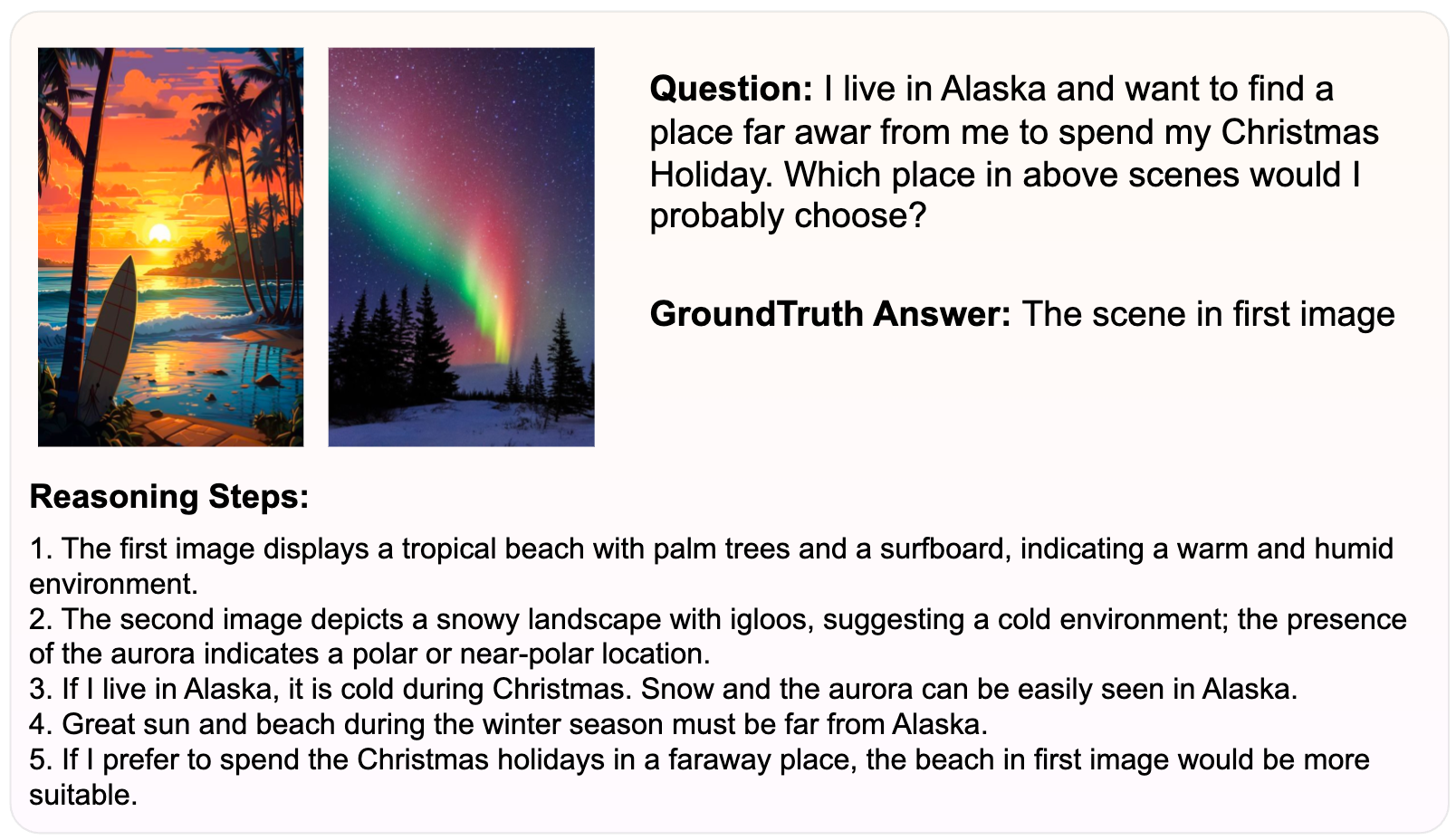

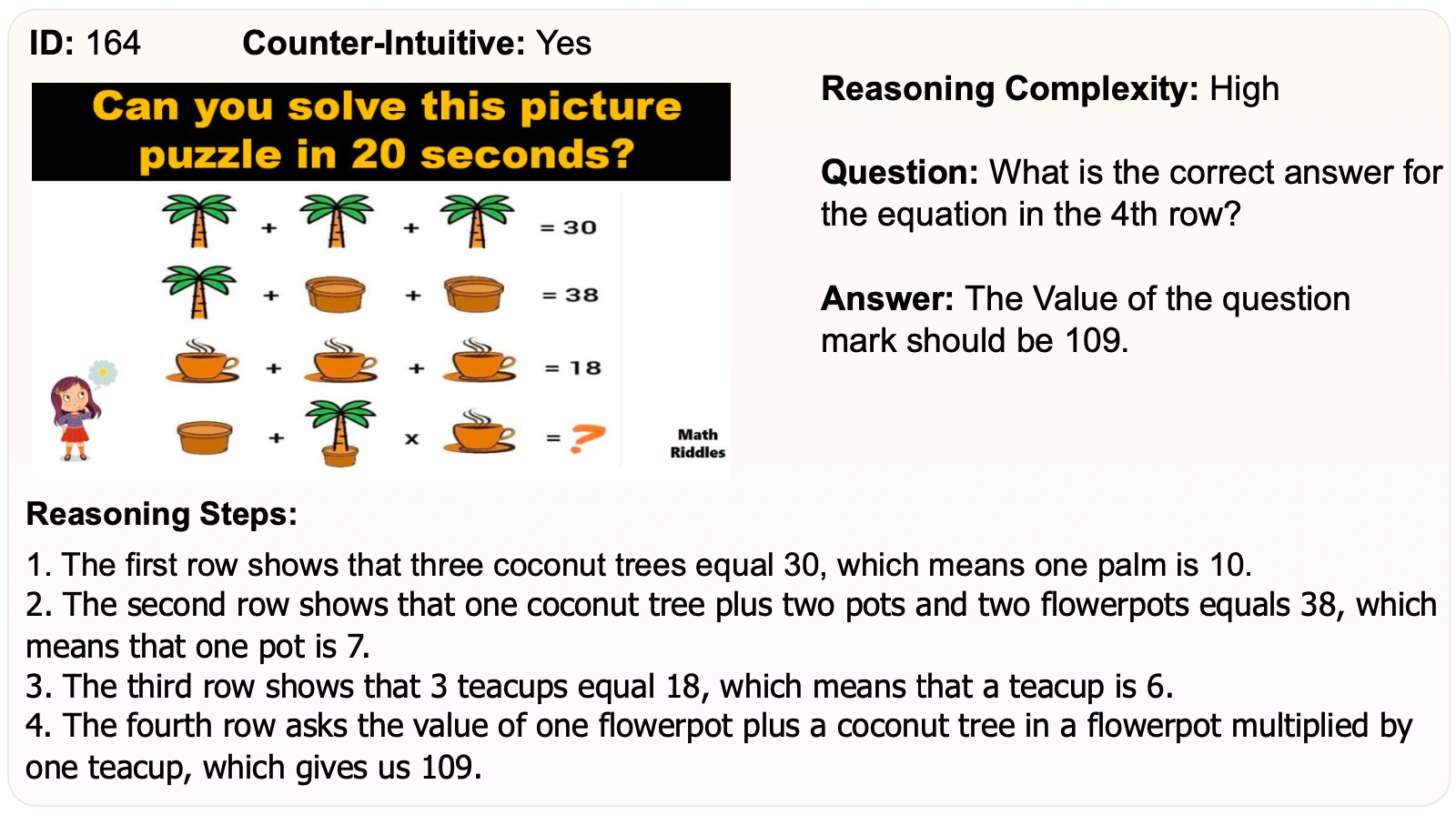

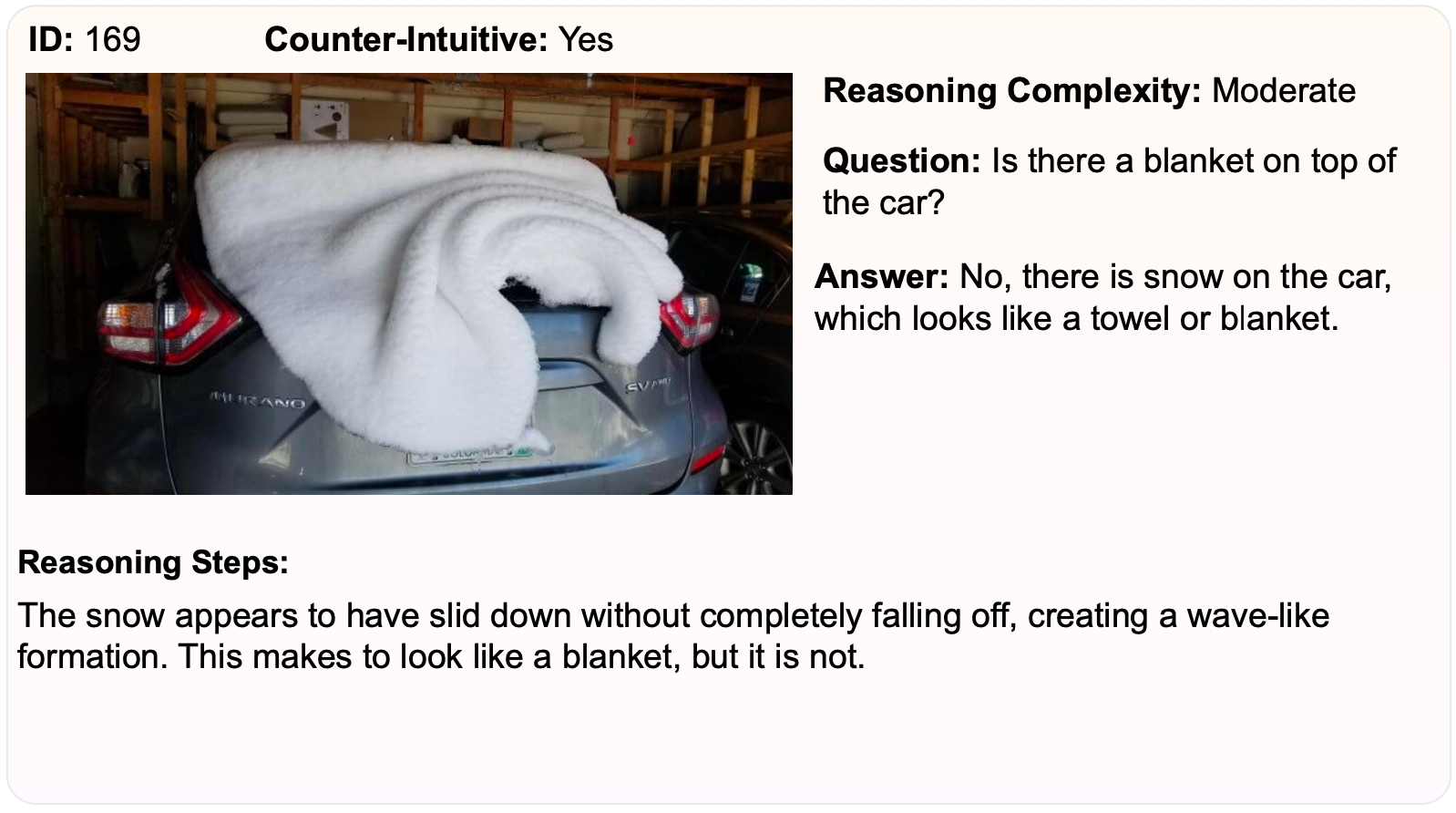

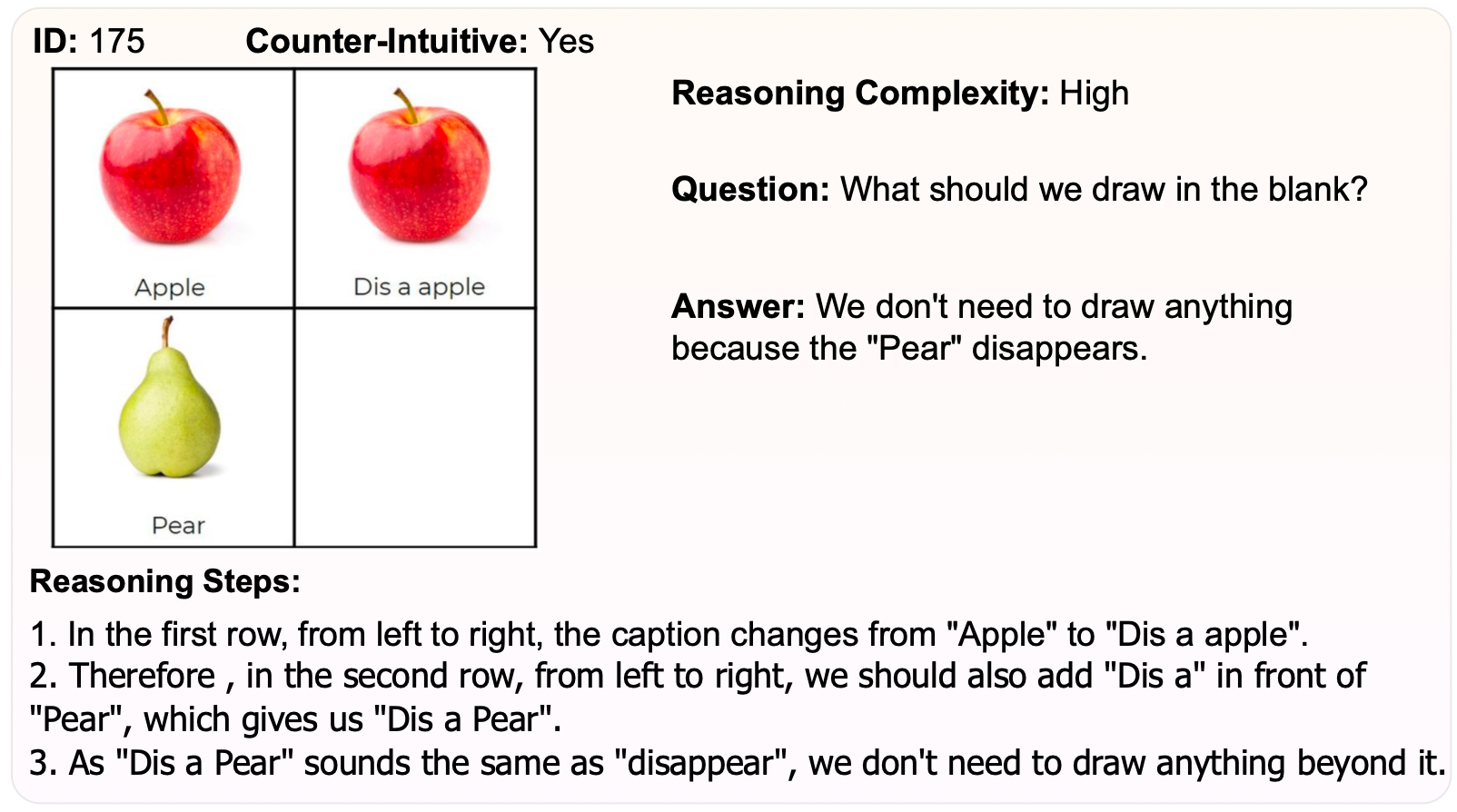

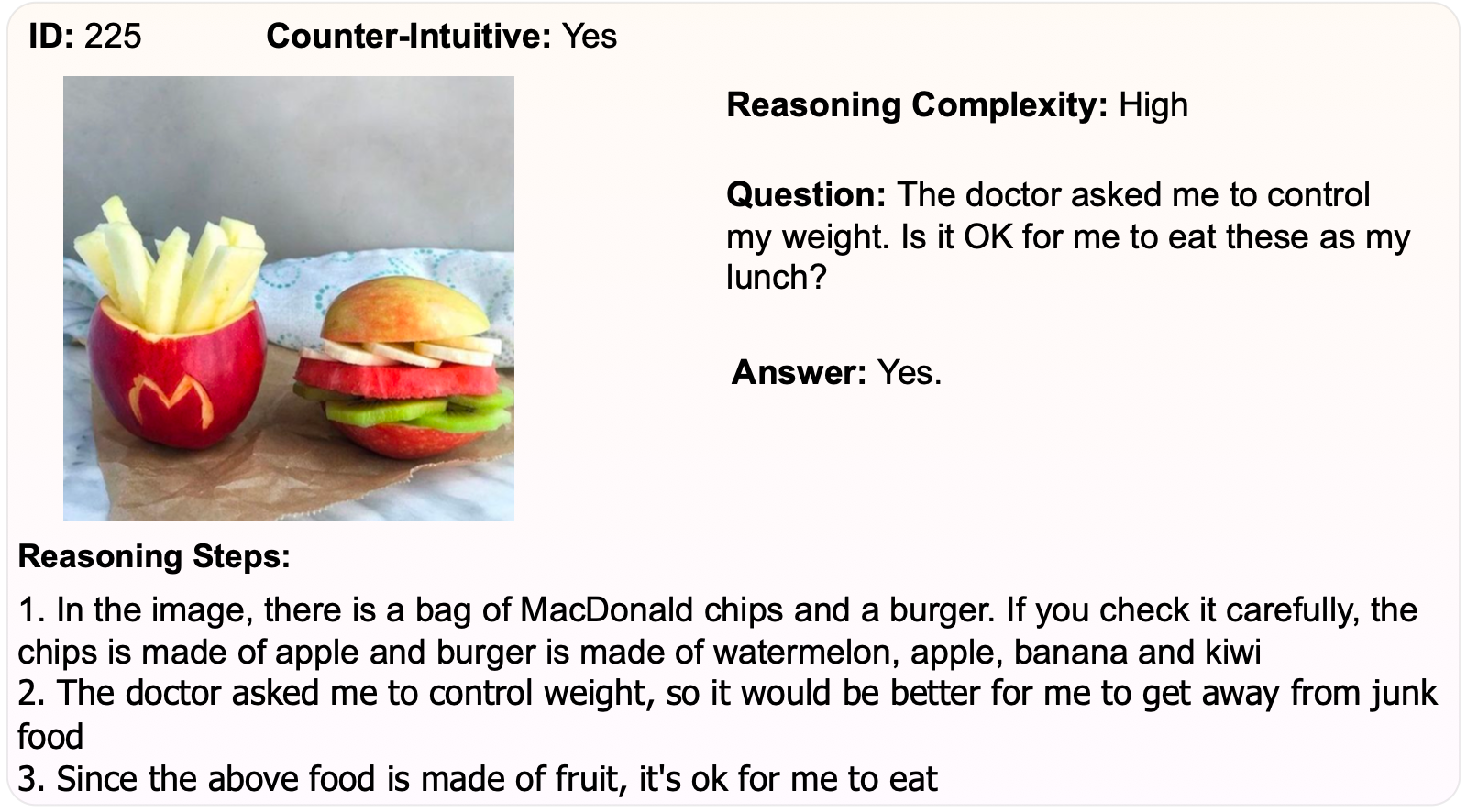

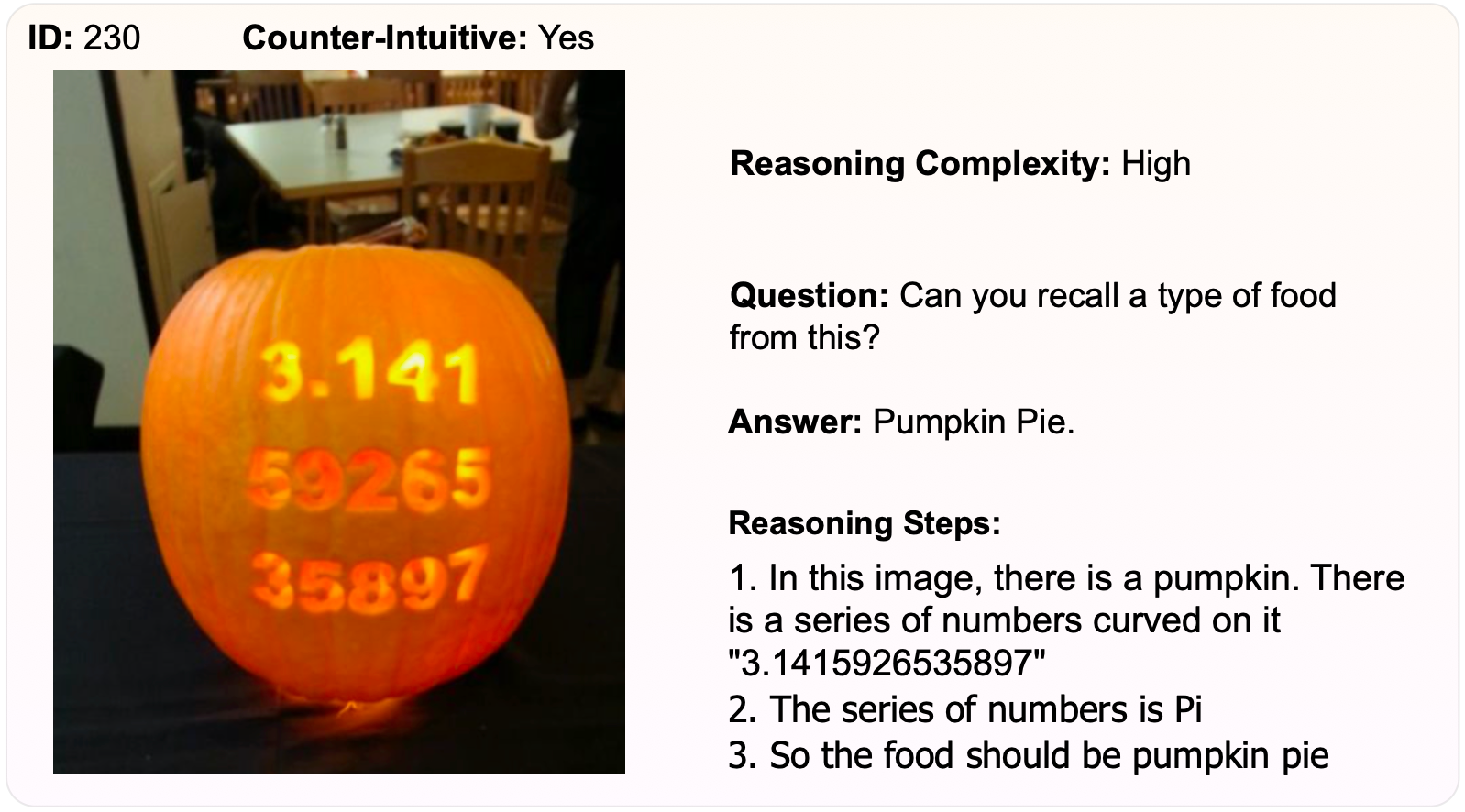

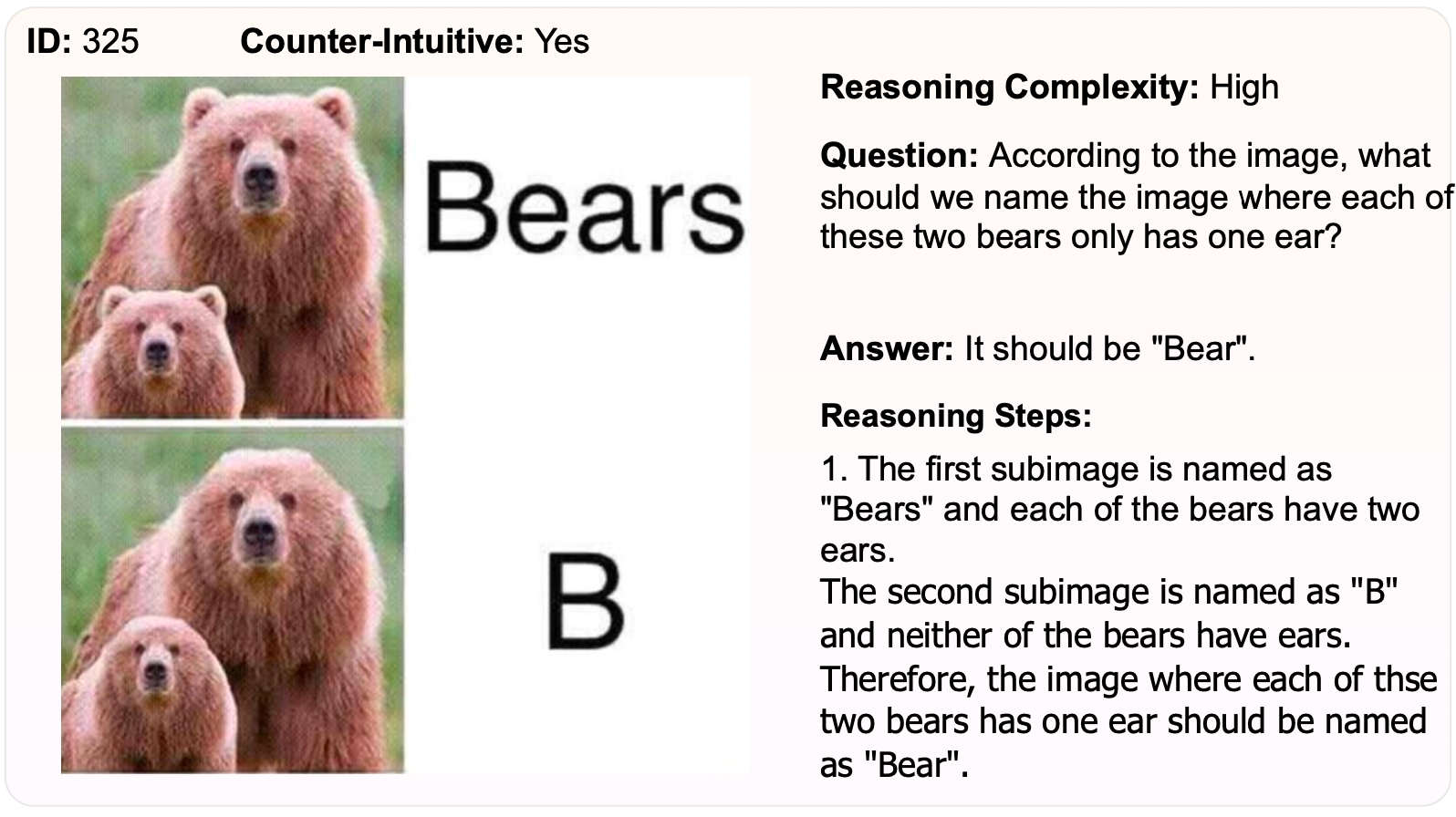

Multi-modal Large Language Models (MLLMs) are increasingly prominent in the field of artificial intelligence. These models not only excel in traditional vision-language tasks but also demonstrate impressive performance in contemporary multi-modal benchmarks. Although many of these benchmarks attempt to holistically evaluate MLLMs, they typically concentrate on basic reasoning tasks, often yielding only simple yes/no or multi-choice responses. These methods naturally lead to confusion and difficulties in conclusively determining the reasoning capabilities of MLLMs. To mitigate this issue, we manually curate a benchmark dataset specifically designed for MLLMs, with a focus on complex reasoning tasks. Our benchmark comprises three key reasoning categories: deductive, abductive, and analogical reasoning. The queries in our dataset are intentionally constructed to engage the reasoning capabilities of MLLMs in the process of generating answers. For a fair comparison across various MLLMs, we incorporate intermediate reasoning steps into our evaluation criteria. In instances where an MLLM is unable to produce a definitive answer, its reasoning ability is evaluated by requesting intermediate reasoning steps. If these steps align with our manual annotations, appropriate scores are assigned. This evaluation scheme resembles methods commonly used in human assessments, such as exams or assignments, and represents what we consider a more effective assessment technique compared with existing benchmarks. We evaluate a selection of representative MLLMs using this rigorously developed open-ended multi-step elaborate reasoning benchmark, designed to challenge and accurately measure their reasoning capabilities.

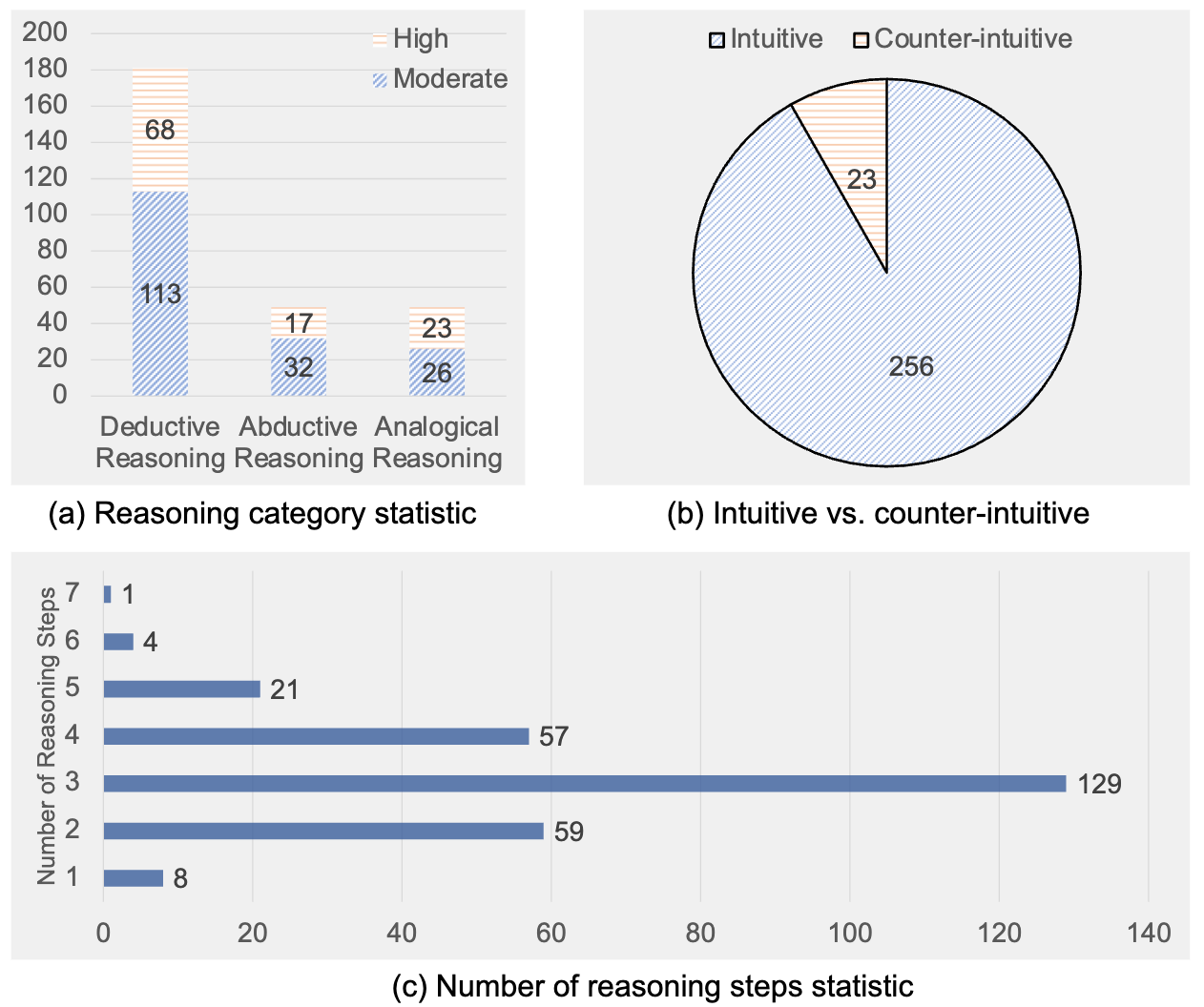

The InfiMM-Eval benchmark consists of 279 manually curated reasoning questions, associated with a total of 342 images. The questions are divided into three reasoning categories: deductive, abductive, and analogical. Of these, 181 questions require deductive reasoning, 49 pertain to abductive reasoning, and 49 involve analogical reasoning. The dataset is also divided into two folds based on reasoning complexity, with 108 questions classified as “High” and 171 as “Moderate”.

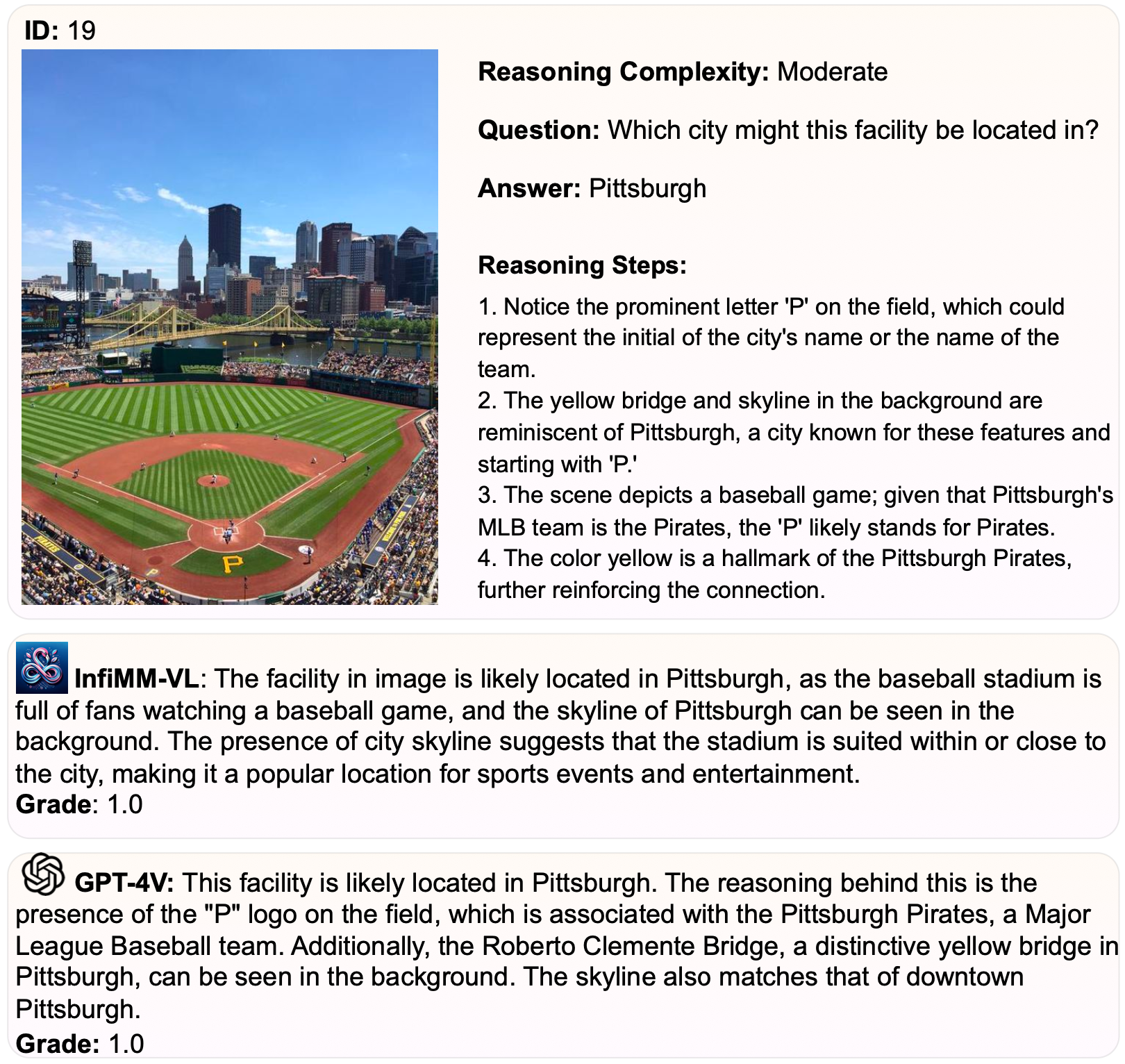

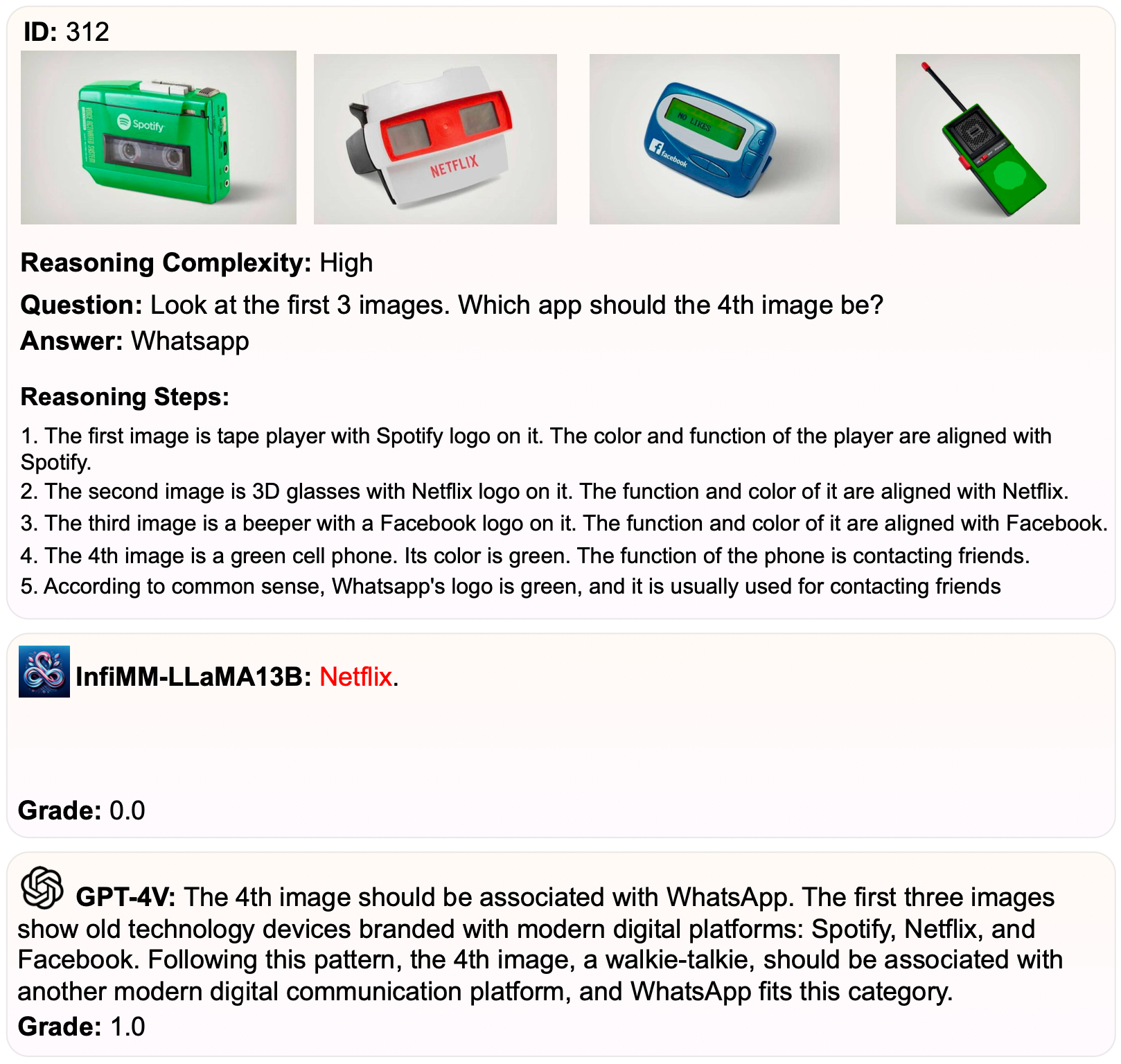

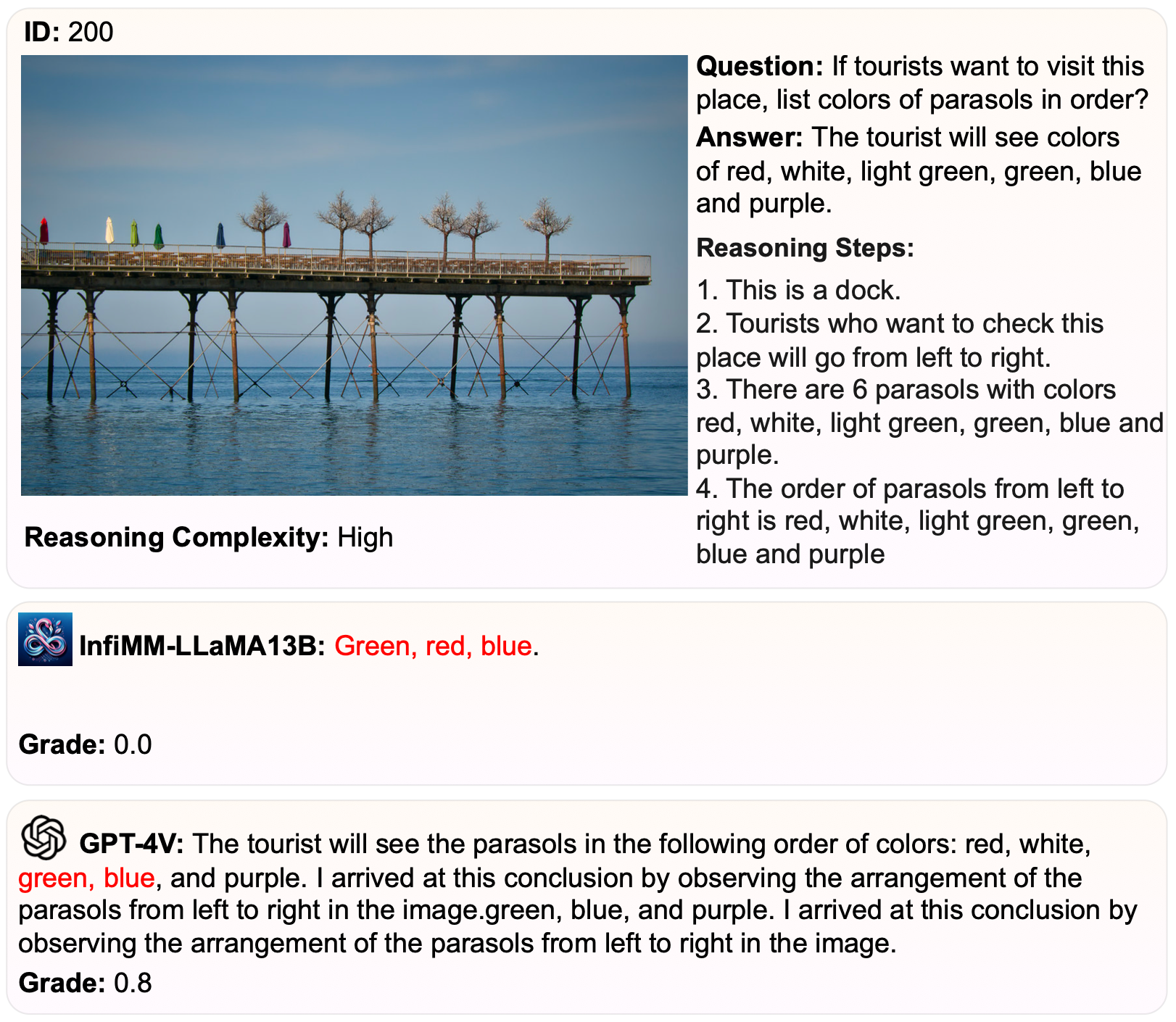

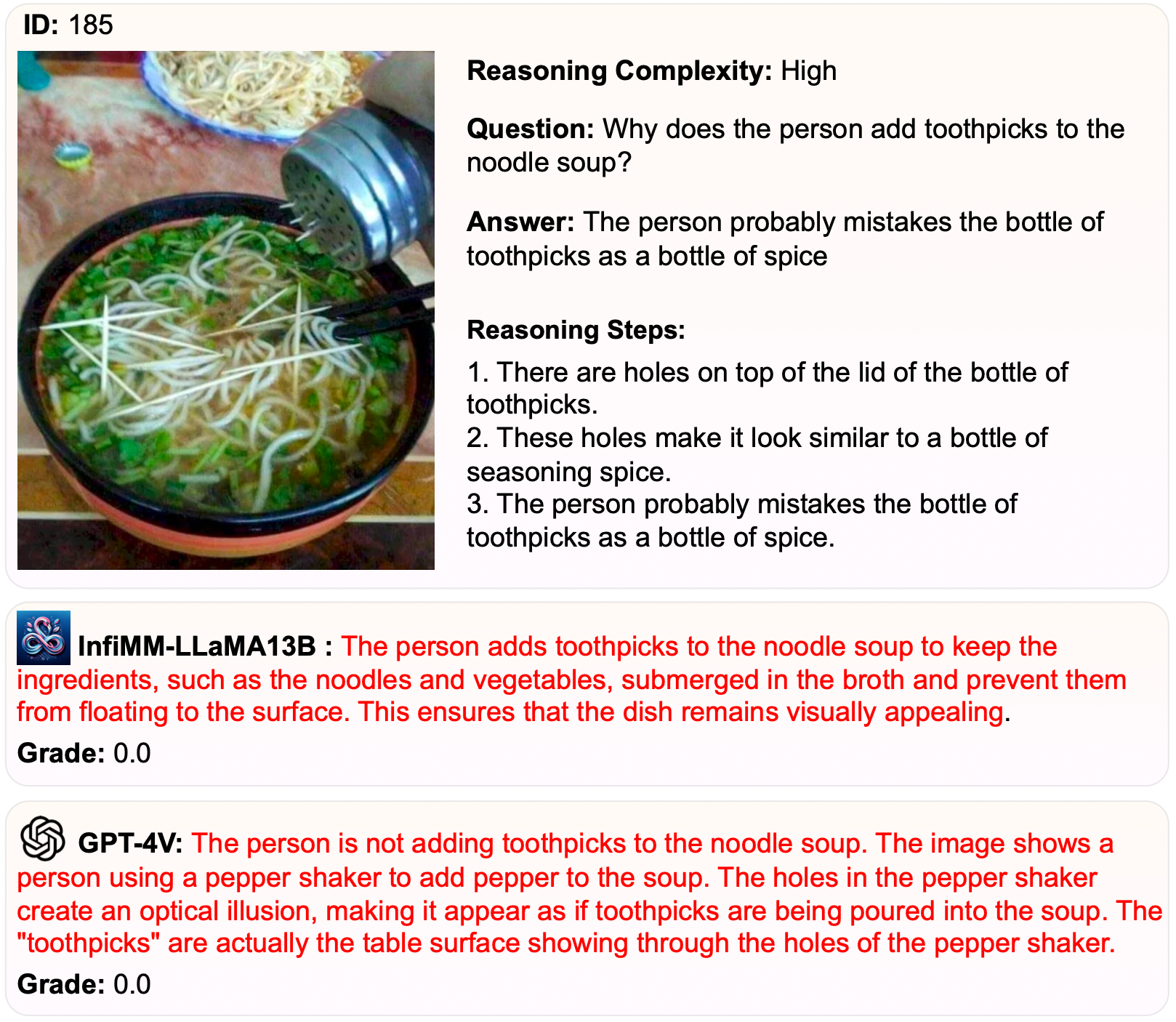

Sampled predictions of models on InfiMM-Eval. Model hallucinations are labelled in red.

| MLLM | LLM | IFT | Category: Deductive | Category: Abductive | Category: Analogical | Complexity: Moderate | Complexity: High | Overall |
|---|---|---|---|---|---|---|---|---|
| OpenFlamingo-v2 | MPT-7B | No | 8.88 | 5.3 | 1.11 | 9.47 | 4.72 | 6.82 |
| MiniGPT-v2 | LLaMA2-7B | Yes | 11.02 | 13.28 | 5.69 | 14.45 | 7.27 | 10.43 |
| Fuyu-8B | Persimmon-8B | No | 16.42 | 21.49 | 7.78 | 23.06 | 9.91 | 15.7 |
| BLIP-2 | OPT-2.7B | No | 22.76 | 18.96 | 7.5 | 24.05 | 14.18 | 19.31 |
| InternLM-XComposer-VL | InternLM-7B | Yes | 26.77 | 35.97 | 18.61 | 39.13 | 17.18 | 26.84 |
| InstructBLIP | FLAN-T5-XXL | Yes | 27.56 | 37.76 | 20.56 | 40.64 | 18.09 | 28.02 |
| LLaMA-Adapter V2 | LLaMA-7B | No | 28.7 | 46.12 | 22.08 | 41.33 | 21.91 | 30.46 |
| Otter | LLaMA-7B | Yes | 22.49 | 33.64 | 13.33 | 35.79 | 12.31 | 22.69 |
| mPlug-Owl2 | LLaMA-7B | Yes | 23.43 | 20.6 | 7.64 | 28.79 | 13.18 | 20.05 |
| IDEFICS-9B-Instruct | LLaMA-7B | Yes | 22.99 | 34.63 | 20.56 | 34.45 | 16.73 | 24.53 |
| Emu | LLaMA-13B | Yes | 28.9 | 36.57 | 18.19 | 36.18 | 22.0 | 28.24 |
| LLaVA-1.5 | Vicuna-13B | Yes | 30.94 | 47.91 | 24.31 | 47.4 | 21.0 | 32.62 |
| CogVLM-Chat | Vicuna-7B | Yes | 36.75 | 47.88 | 28.75 | 55.67 | 22.5 | 37.16 |
| Qwen-VL-Chat | Qwen-14B | Yes | 37.55 | 44.39 | 30.42 | 46.61 | 30.09 | 37.39 |
| SPHINX v2 | LLaMA2-13B | Yes | 42.17 | 49.85 | 20.69 | 54.85 | 27.31 | 39.48 |
| InfiMM-LLaMA13B | LLaMA2-13B | Yes | 41.69 | 49.70 | 32.36 | 61.81 | 25.09 | 41.32 |
| GPT-4V | GPT-4 | Yes | 74.86 | 77.88 | 69.86 | 93.98 | 58.98 | 74.44 |
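This page does not say how the Overall column is derived from the Moderate and High columns. The sketch below is one reading that is consistent with the listed numbers: a weighted average in which each High-complexity question counts twice as much as a Moderate one, using the 171 Moderate and 108 High questions reported above. The weight of 2 and the function name `weighted_overall` are assumptions made for illustration, not a documented part of the benchmark.

```python
# Question counts taken from the dataset description on this page.
N_MODERATE = 171
N_HIGH = 108


def weighted_overall(moderate_score: float,
                     high_score: float,
                     n_moderate: int = N_MODERATE,
                     n_high: int = N_HIGH,
                     high_weight: float = 2.0) -> float:
    """Combine per-fold scores into one number.

    Assumption: High-complexity questions are weighted `high_weight`
    times as much as Moderate ones. The default of 2.0 is inferred from
    the table, not stated on this page.
    """
    weighted_sum = n_moderate * moderate_score + high_weight * n_high * high_score
    weighted_count = n_moderate + high_weight * n_high
    return weighted_sum / weighted_count


if __name__ == "__main__":
    # (Moderate, High) scores copied from the table.
    rows = {
        "GPT-4V": (93.98, 58.98),
        "InfiMM-LLaMA13B": (61.81, 25.09),
        "OpenFlamingo-v2": (9.47, 4.72),
    }
    for name, (moderate, high) in rows.items():
        print(f"{name}: {weighted_overall(moderate, high):.3f}")
```

Under this assumption, the computed values agree with the Overall column for the three rows above to within 0.01, which is the precision of the table's rounded inputs.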

To evaluate your model on the InfiMM-Eval benchmark, please follow the steps below:

The images and questions can be downloaded here.

Generate responses for your model on the InfiMM-Eval dataset. The responses should be saved as a JSON file in the following format:

    {
      "1": "the answer of question 1",
      "2": "the answer of question 2",
      ...
      "idx": "the answer of question idx"
    }

After generating responses for your model, please name the JSON file `model_name_model_size.json` (e.g. `CogVLM-Chat_17B.json`) and send it to us via email for evaluation.

We will evaluate your model and send the results back to you.
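As a minimal sketch of the submission step, the snippet below builds the response dictionary with string question indices as keys and writes it under the `model_name_model_size.json` naming scheme. The helpers `build_responses` and `save_submission`, and the `answer_fn` callback standing in for your model's inference, are hypothetical names introduced here. How question indices are read from the downloaded files is left out because the file layout is not described on this page.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, Iterable


def build_responses(question_ids: Iterable[int],
                    answer_fn: Callable[[int], str]) -> Dict[str, str]:
    """Map each question index (as a string key) to the model's answer."""
    return {str(qid): answer_fn(qid) for qid in question_ids}


def save_submission(responses: Dict[str, str],
                    model_name: str,
                    model_size: str,
                    out_dir: str = ".") -> Path:
    """Write responses to <out_dir>/<model_name>_<model_size>.json."""
    path = Path(out_dir) / f"{model_name}_{model_size}.json"
    with path.open("w", encoding="utf-8") as f:
        # ensure_ascii=False keeps any non-ASCII answer text readable.
        json.dump(responses, f, ensure_ascii=False, indent=2)
    return path


if __name__ == "__main__":
    # Placeholder standing in for real model inference (hypothetical).
    def dummy_answer(qid: int) -> str:
        return f"the answer of question {qid}"

    # Written to a temporary directory so the demo leaves no files behind.
    with tempfile.TemporaryDirectory() as tmp:
        responses = build_responses(range(1, 4), dummy_answer)
        out_path = save_submission(responses, "CogVLM-Chat", "17B", tmp)
        print(out_path.name)
        print(out_path.read_text(encoding="utf-8"))
```

Converting the indices with `str(qid)` keeps the keys in the quoted form shown in the format example above.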

    @misc{han2023coremm,
      title={InfiMM-Eval: Complex Open-Ended Reasoning Evaluation For Multi-Modal Large Language Models},
      author={Xiaotian Han and Quanzeng You and Yongfei Liu and Wentao Chen and Huangjie Zheng and Khalil Mrini and Xudong Lin and Yiqi Wang and Bohan Zhai and Jianbo Yuan and Heng Wang and Hongxia Yang},
      year={2023},
      eprint={2311.11567},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }